Today AI chip startup Groq announced that its new processor has achieved 21,700 inferences per second (IPS) on ResNet-50 v2 inference. Groq’s inference performance exceeds that of other commercially available neural network architectures, with throughput more than double the ResNet-50 score of the incumbent GPU-based architecture. ResNet-50, a convolutional neural network for image classification, is a common inference benchmark and is often used as a standard for measuring the performance of machine learning accelerators.

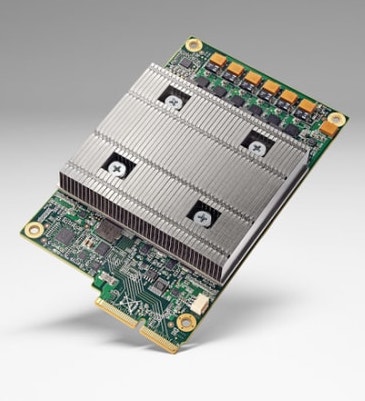

In addition, earlier this week, the Linley Group released its latest Microprocessor Report titled “Groq Rocks Neural Networks,” which concludes that Groq’s “TSP stands out in both peak performance and ResNet-50 throughput,” and that “Groq’s [deep-learning] accelerator is the fastest available on the merchant market.”

“These ResNet-50 results are a validation that Groq’s unique architecture and approach to machine learning acceleration delivers substantially faster inference performance than our competitors,” said Jonathan Ross, Groq’s co-founder and CEO. “These real-world proof points, based on industry-standard benchmarks and not simulations or hardware emulation, confirm the measurable performance gains for machine learning and artificial intelligence applications made possible by Groq’s technologies.”

Significantly, the Groq platform doesn’t require large batch sizes for optimal inference performance: its tensor streaming processor (TSP) architecture achieves peak throughput even at batch size 1, that is, when processing one image at a time. Efficient processing of small batches is especially important for minimizing latency in real-time applications. While Groq’s architecture is up to 2.5 times faster than GPU-based platforms at large batch sizes, the TSP is up to 17 times faster at batch size 1.
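To see why batch size matters for latency, here is a minimal sketch based on Little’s law, under a simplifying assumption of our own: the accelerator always holds at least one full batch in flight, so per-image latency can be no lower than batch size divided by sustained throughput. The function name `latency_floor_seconds` is hypothetical, and the batch of 128 is an illustrative large batch, not a measured configuration. The sketch also ignores the time spent waiting for a large batch to fill, which only widens the gap.

```python
def latency_floor_seconds(batch_size: int, throughput_ips: float) -> float:
    """Lower bound on per-image latency from Little's law (hypothetical helper).

    Assumes at least `batch_size` images are in flight on average, so
    latency >= items_in_flight / throughput >= batch_size / throughput.
    Queueing delay while a batch fills up is not modeled.
    """
    if batch_size < 1 or throughput_ips <= 0:
        raise ValueError("batch_size must be >= 1 and throughput_ips > 0")
    return batch_size / throughput_ips


if __name__ == "__main__":
    ips = 21_700  # headline ResNet-50 figure from the article
    for batch in (1, 128):  # 128 is an illustrative large batch, not measured
        floor_us = latency_floor_seconds(batch, ips) * 1e6
        print(f"batch={batch:>3}: latency floor ~ {floor_us:,.1f} us")
```

At the same 21,700 IPS, this simple model gives a floor of roughly 46 µs per image at batch size 1 versus roughly 5.9 ms at batch size 128, which is why matching throughput at small batches matters for real-time workloads.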

With the Groq architecture providing more than a 2x performance advantage over GPU-based solutions, engineering managers can deploy platforms that deliver twice the inference performance without doubling infrastructure costs. Deploying fewer systems can also save datacenter space, lower power usage, and reduce overall system complexity.
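The fleet-sizing arithmetic behind that claim can be sketched as a ceiling division. This is a simplified illustration, not a capacity-planning tool: it assumes throughput scales linearly with the number of systems, treats one processor as one system, and uses a hypothetical demand of 1,000,000 IPS and a hypothetical comparison platform with half the per-system throughput. The function name `systems_needed` is also hypothetical.

```python
def systems_needed(target_ips: int, per_system_ips: int) -> int:
    """Smallest number of systems whose combined throughput meets target_ips.

    Hypothetical helper: uses integer ceiling division and assumes linear
    scaling (no load-balancing, networking, or redundancy overhead).
    """
    if target_ips < 0 or per_system_ips <= 0:
        raise ValueError("target_ips must be >= 0 and per_system_ips > 0")
    return -(-target_ips // per_system_ips)  # ceil without floating point


if __name__ == "__main__":
    target = 1_000_000     # hypothetical fleet-wide demand, inferences/second
    faster = 21_700        # headline ResNet-50 figure from the article
    slower = faster // 2   # hypothetical platform with half the throughput
    print("faster platform:", systems_needed(target, faster))
    print("slower platform:", systems_needed(target, slower))
```

Under these assumptions the faster platform needs 47 systems and the half-speed platform needs 93, so roughly halving the per-system count is where the space, power, and complexity savings come from.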

Sign up for our insideHPC Newsletter