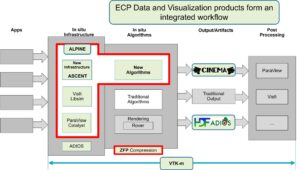

With the advent of the exascale supercomputing era, computational scientists can run simulations at higher resolutions, add more detailed physical phenomena, increase the size of the physical problems, and couple multiple codes spanning both physical and temporal scales. These exascale simulations generate ever-increasing amounts of data. The Data and Visualization efforts in the US Department of Energy’s (DOE’s) Exascale Computing Project (ECP) provide an ecosystem of capabilities for data management, analysis, lossy compression, and visualization that enables scientists to extract insight from these simulations while minimizing the amount of data that must be written to long-term storage (Figure 1).

Big data is the conceptual link between the separate ALPINE and zfp projects that make up the joint ALPINE/zfp ECP effort. The ALPINE project focuses on both post hoc and in situ infrastructures. The in situ approach delivers visualization, data analysis, and data reduction capabilities while the simulation is running. This approach takes the human out of the loop and can potentially shift much of the analysis and visualization work from post hoc to in situ. ALPINE has also supported the development of a range of analysis algorithms over the course of the ECP. These algorithms often have data reduction as a goal or a side benefit. The zfp project addresses the mismatch between compute and I/O rates through floating-point compression algorithms.

source: Exascale Computing Project