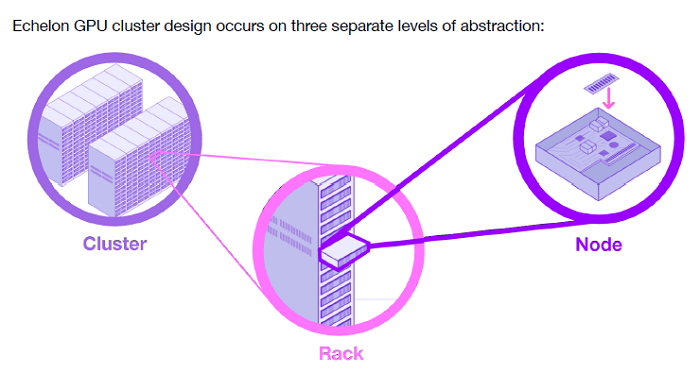

In this whitepaper, “Deep Learning GPU Cluster,” our friends over at Lambda walk you through the Lambda Echelon multi-node cluster reference design: a node design, a rack design, and a cluster-level architecture. This document is for technical decision-makers and engineers. You’ll learn about the Echelon’s compute, storage, networking, power distribution, and thermal design. It is not a cluster administration handbook; it is a high-level technical overview of one possible system architecture.

The whitepaper includes the following sections:

- Lambda Echelon

- Use Cases

- Echelon Design

- Cluster Design

- Rack Level Design

- Node Level Design

- Software

- Support

- About Lambda

- Let’s build one for you

- Appendix A: Use Case Descriptions

Lambda provides GPU-accelerated workstations and servers to the top AI research labs in the world. The company’s hardware and software are used by AI researchers at Apple, Intel, Microsoft, Tencent, Stanford, Berkeley, University of Toronto, Los Alamos National Labs, and many others.

Download the whitepaper, “Deep Learning GPU Cluster,” to learn more about the Lambda Echelon multi-node cluster. Lambda is ready to design a Lambda Echelon for your team.