Members of the NERSC Efficiency Optimization Project team (left to right): Norm Bourassa and Jeff Broughton of NERSC; John Elliott and Deidre Carter of Sustainable Berkeley Lab; and Walker Johnson of kW Engineering. Not pictured: Brent Draney, Jeff Grounds, and Ernest Jew of NERSC; Mark Friedrich, Berkeley Lab.

Finding ways to improve energy efficiency in HPC data centers is not a new challenge, but it is an evolving one, driven by increasing data creation, changes in the world’s climate, and pressure to reduce energy consumption and carbon footprints. Together, these factors dramatically influence the design and maintenance of HPC facilities and the hardware and software resources within them.

Fortunately, for decades Lawrence Berkeley National Laboratory (Berkeley Lab) has been at the forefront of efforts to design, build, and optimize energy-efficient hyperscale data centers. The Energy Technologies Area (ETA) has supported the National Energy Research Scientific Computing Center (NERSC) and other Berkeley Lab facilities on energy issues for some 30 years, according to Rich Brown, a research scientist in ETA’s Building Technologies and Urban Systems Division. In 2007, for example, Berkeley Lab was lead author on a U.S. Environmental Protection Agency (EPA) study of the national impact of data center energy use and on the resulting DOE report. In addition, ETA has been involved in EPA’s Energy Star program for more than 25 years, developing specifications for servers, data storage, and network equipment.

“Berkeley Lab has been the lead institution in the U.S. on data center energy use research,” Brown said. “ETA is an applied science division, and the mission here is to reduce the environmental impacts of energy use.” Much of this work has been funded through the Federal Energy Management Program, including establishing a Center of Excellence at Berkeley Lab that serves as a repository of knowledge and a source of consulting for federal agencies on energy and water management in data centers, Brown noted.

More recently, this expertise has been applied to the design, construction, and operation of Berkeley Lab’s Shyh Wang Hall and the computing, storage, and networking systems hosted in the building’s data center. These efforts also led to the NERSC Efficiency Optimization Project, a collaboration between NERSC, ETA, and Sustainable Berkeley Lab that is applying emerging operational data analytics (ODA) methods to optimize cooling systems and save electricity.

“There are strong ties between NERSC, the Energy Sciences Network (ESnet), and the research community in ETA,” Brown said. “Berkeley Lab facilities have traditionally been a place where we can apply some of these findings and work with facilities managers to see how these ideas work in a large institution.”

Continuous Data Analytics

In 2015, the Computing Sciences Area, including NERSC and ESnet, moved into the newly constructed Shyh Wang Hall, which was designed from the get-go to be highly energy efficient (the building received LEED Gold certification in 2017). One of the key performance metrics for any data center is power usage effectiveness (PUE), the ratio of total data center electricity draw to the electricity used directly for computing. Shyh Wang Hall was designed to have a target PUE of less than 1.1. This means that supporting and cooling the computing equipment would require just 10% or less of the energy used directly for computing, putting NERSC on par with the most efficient data-processing facilities in the world.
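As a quick illustration of the metric, the minimal Python sketch below computes PUE from two power readings and the non-IT overhead it implies. It is not NERSC’s tooling; the function names and the 1,000 kW IT load with 95 kW of cooling and support are hypothetical values chosen for the example.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_kw / it_kw


def overhead_fraction(pue_value: float) -> float:
    """Non-IT energy (cooling, power distribution, etc.) as a fraction of IT energy."""
    return pue_value - 1.0


# Hypothetical readings: 1,000 kW of IT load plus 95 kW of cooling and support.
it_load_kw = 1000.0
support_kw = 95.0
p = pue(it_load_kw + support_kw, it_load_kw)
print(f"PUE = {p:.3f}, overhead = {overhead_fraction(p):.1%} of IT energy")
```

A PUE just under 1.1, as in this example, corresponds to an overhead just under 10% of the IT energy, which is the relationship the design target expresses.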

But the Berkeley Lab team felt there was room for improvement. So NERSC, ETA, and Sustainable Berkeley Lab set out to find additional ways to further optimize performance. In 2016, working with the mechanical engineering firm kW Engineering, the team identified a number of potential savings opportunities and began analyzing and applying the metrics to various systems and processes in the NERSC data center, according to John Elliott, chief sustainability officer at Berkeley Lab.

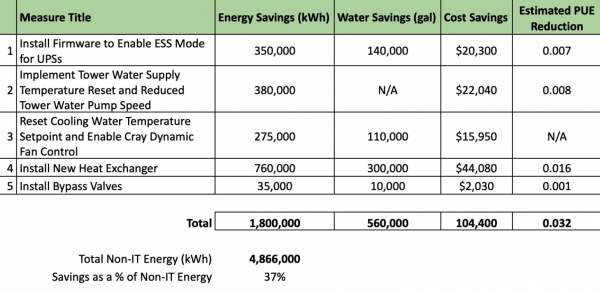

“A lot of this has been about optimizing systems and controls for different ranges of outside weather conditions,” he said. “By putting the lowest energy-consuming portion of the plant in the lead, we’ve been able to save quite a bit of energy.” To date, the project has cut NERSC’s non-IT electricity consumption by about 37% (see table), saving 1.8 million kWh of electricity and more than half a million gallons of water per year. It has also established ongoing monitoring that gives NERSC the tools to protect these savings over time.
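To see how a cut in non-IT electricity shows up in PUE, the worked equation below assumes IT energy stays fixed while non-IT energy falls by 37%. The starting value of 1.10 is illustrative only (it matches the design target, but the article does not report NERSC’s pre-project PUE).

```latex
\begin{aligned}
\mathrm{PUE}_0 &= \frac{E_{\text{IT}} + E_{\text{non-IT}}}{E_{\text{IT}}} = 1 + \frac{E_{\text{non-IT}}}{E_{\text{IT}}} \\
\mathrm{PUE}_1 &= \frac{E_{\text{IT}} + (1 - 0.37)\,E_{\text{non-IT}}}{E_{\text{IT}}} = 1 + 0.63\,(\mathrm{PUE}_0 - 1) \\
\text{e.g. } \mathrm{PUE}_0 = 1.10: \quad \mathrm{PUE}_1 &= 1 + 0.63 \times 0.10 = 1.063
\end{aligned}
```

Because the reduction applies only to the portion above 1.0, a large percentage cut in overhead at an already efficient facility moves PUE by a few hundredths, even when the kilowatt-hours saved are substantial.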

These efforts included implementing all of the metrics in SkySpark, a system that collects and analyzes building data and sets up calculated points derived from that data. SkySpark works in conjunction with NERSC’s OMNI data collection system, a platform of applications that combines HPC and IT systems data with cooling and facility systems performance data from the Building Management System, plus supplemental rack-level IT sensors and computer syslog data. Together, these systems enable NERSC’s Energy Efficiency team to use sophisticated ODA tools to continuously monitor operations and implement energy-efficiency and reliability measures. The initial emphasis has been on the facility’s cooling systems.

For example, most facilities calculate PUE as an annual average, but NERSC can now calculate the metric consistently every 15 minutes. “And when you have that,” Elliott said, “you start being able to ask new kinds of questions about how to optimize the systems.”
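Calculating PUE per 15-minute interval, rather than once a year, makes short-term variation visible. The minimal sketch below assumes each interval’s metered IT energy and total facility energy are available; the `Interval` type and the readings are hypothetical and are not SkySpark or OMNI interfaces.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """Metered energy for one 15-minute interval, in kWh (hypothetical record type)."""
    it_kwh: float
    total_kwh: float


def interval_pue(iv: Interval) -> float:
    """PUE for a single interval: total facility energy / IT energy."""
    return iv.total_kwh / iv.it_kwh


def period_pue(intervals: list[Interval]) -> float:
    """PUE over a whole period, weighting each interval by its energy use.

    Energy is summed first and divided once, the usual way to form an annual
    figure, rather than averaging the per-interval ratios.
    """
    total = sum(iv.total_kwh for iv in intervals)
    it = sum(iv.it_kwh for iv in intervals)
    return total / it


# Hypothetical readings: a cool night interval and a hot afternoon interval.
readings = [Interval(it_kwh=250.0, total_kwh=262.5),
            Interval(it_kwh=250.0, total_kwh=287.5)]

for i, iv in enumerate(readings):
    print(f"interval {i}: PUE {interval_pue(iv):.2f}")
print(f"period PUE: {period_pue(readings):.2f}")
```

Here the single period figure of 1.10 hides a swing from 1.05 to 1.15 between intervals, which is the kind of pattern a 15-minute series lets operators tie back to weather and plant settings.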

Active Duplex Communication

While much of the savings has come from optimizing controls, additional savings have come from relatively minor capital improvements, Elliott noted, including installing a heat exchanger in parallel with the closed cooling loop, which reduced pressure drop and significantly improved efficiency.
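Why a lower pressure drop saves energy: pump power is proportional to flow times pressure rise. The article does not detail the piping, so the sketch below assumes an idealized case in which the added exchanger is identical to an existing one, flow splits evenly between them, pressure drop scales with the square of flow, and the exchanger dominates loop resistance. The resistance coefficient, flow rate, and pump efficiency are hypothetical.

```python
def pressure_drop_pa(k: float, flow_m3s: float) -> float:
    """Idealized model: pressure drop grows with the square of volumetric flow."""
    return k * flow_m3s ** 2


def pump_power_w(flow_m3s: float, dp_pa: float, efficiency: float = 0.7) -> float:
    """Electrical pump power: flow (m^3/s) x pressure rise (Pa) / pump efficiency."""
    return flow_m3s * dp_pa / efficiency


def parallel_dp_pa(k: float, flow_m3s: float, n_paths: int) -> float:
    """Pressure drop when flow splits evenly across n identical parallel paths."""
    return pressure_drop_pa(k, flow_m3s / n_paths)


# Hypothetical loop: 50 L/s through an exchanger with a 100 kPa drop at that flow.
K = 4.0e7      # Pa per (m^3/s)^2, chosen so 0.05 m^3/s -> 100 kPa
FLOW = 0.05    # m^3/s

single = pump_power_w(FLOW, pressure_drop_pa(K, FLOW))
paired = pump_power_w(FLOW, parallel_dp_pa(K, FLOW, n_paths=2))
print(f"one exchanger:   {single / 1000:.2f} kW")
print(f"two in parallel: {paired / 1000:.2f} kW ({paired / single:.0%} of original)")
```

Real loops also include pipe, valve, and coil losses that do not shrink, and flow may not split evenly, so actual savings would be smaller; the sketch only shows why a reduced pressure drop translates directly into lower pump power.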

“The whole point of energy efficiency is that you still get 100% of the service that you want from your systems – it’s just going to use less electricity in the process,” said Norm Bourassa, a building systems and energy engineer in NERSC’s Building Infrastructure Group who has been instrumental in guiding this project, including presenting a case study on the team’s work at the 2019 International Conference on Parallel Processing in Kyoto, Japan.

And it involves more than just the facility cooling plant, Bourassa added. For example, one of the project’s more exciting efforts so far is the creation of active communication between the internal blower fans of NERSC’s Cori supercomputer and the cooling towers and pumps of the traditional cooling plant. The Cray XC Dynamic Fan Speed Control feature automatically varies the cabinet blower fan speeds based on processor temperatures. This new ODA link provides continuous feedback, enabling the Cray system and building controls to shift the load ratio between the blower fans and the cooling water loop depending on outdoor environmental conditions.

“This is one of the first practical implementations of a duplex communication method, where the fans can go to the plant and ask ‘what temperature is the water right now’ and then determine how to adjust their internal settings accordingly,” Bourassa said. “And they are in constant communication every 15 minutes so that they are always working together.”
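The article does not describe how Cray’s Dynamic Fan Speed Control or the new link actually compute fan speeds. The sketch below is a hypothetical illustration of the fan side of such an exchange: at each 15-minute poll, the fan controller asks the plant for the current supply water temperature and combines it with processor temperature. `PlantStatus`, `fan_speed_pct`, and every setpoint and gain are assumptions for illustration, not Cray’s algorithm.

```python
from dataclasses import dataclass


@dataclass
class PlantStatus:
    """Conditions the (hypothetical) plant reports when the fans ask."""
    supply_water_c: float


def fan_speed_pct(cpu_temp_c: float, plant: PlantStatus,
                  cpu_target_c: float = 70.0, water_design_c: float = 20.0,
                  base_pct: float = 50.0, gain_cpu: float = 4.0,
                  gain_water: float = 3.0,
                  min_pct: float = 30.0, max_pct: float = 100.0) -> float:
    """Hypothetical fan-speed setpoint (percent of maximum).

    Proportional term: processors hotter than target -> faster blowers.
    Feed-forward term: supply water warmer than design -> each unit of airflow
    removes less heat at the (assumed) cabinet water coils, so raise fan speed.
    The result is clamped to the allowed fan range.
    """
    speed = (base_pct
             + gain_cpu * (cpu_temp_c - cpu_target_c)
             + gain_water * (plant.supply_water_c - water_design_c))
    return max(min_pct, min(max_pct, speed))


POLL_INTERVAL_MIN = 15  # cadence quoted in the article

# Hypothetical (processor temp, supply water temp) pairs, one per exchange.
samples = [(70.0, 20.0), (72.0, 24.0), (66.0, 16.0)]
for step, (cpu_c, water_c) in enumerate(samples):
    status = PlantStatus(supply_water_c=water_c)  # the fans "ask" the plant
    speed = fan_speed_pct(cpu_c, status)
    print(f"t={step * POLL_INTERVAL_MIN:>3} min: fan speed {speed:.0f}%")
```

A real duplex link would also run in the other direction, letting the plant adjust water temperature or pump and tower setpoints in response to fan load; that side is omitted here to keep the example short.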

This kind of communication between computing systems and facilities is the wave of the future, he added.

“Up to this point, facilities and compute systems in data centers have been highly siloed,” he said. “But we’re moving into the exascale era where that boundary has to be broken. That’s been one of the most interesting things about this project: the opportunity to merge the compute systems and the facility plant silos into a unified holistic system and thus advance the state of computing science.”

Machine learning will also one day play a role, with the NERSC OMNI system providing years of data that can be used as training datasets, eventually enabling an automated ongoing optimization of plant settings in real time.

“In the march to exascale computing, there are real questions about the hard limits you run up against in terms of energy consumption and cooling loads,” Elliott said. “NERSC is very interested in optimizing its facilities to be leaders in energy-efficient HPC.”

Source: Kathy Kincade at Berkeley Lab