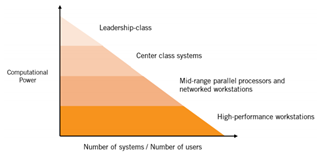

The Center for Data Innovation (CDI), a non-profit think tank that studies the intersection of data, technology and public policy, has gone to bat for increased federal funding for ‘center class’ and mid-range HPC systems, contending that “a decade of funding cuts at the National Science Foundation (NSF) has left the United States with an imbalanced portfolio of HPC resources that is failing to meet growing demand among AI researchers who need large amounts of computing power to solve data intensive problems in such fields as engineering, biology, and computer science.”

In a report released today, CDI called for tripling NSF’s HPC funding, to at least $500 million annually over the next five years, and recommended that NSF target these investments toward mid-range HPC systems in states with low HPC availability but high levels of AI research. The report similarly calls for a three-fold increase in DOE’s funding for HPC systems to at least $1.5 billion annually over five years.

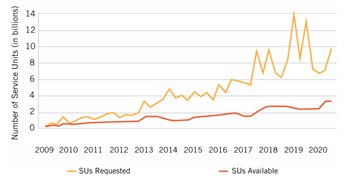

A core CDI argument is that DOE HPC investments “have tilted toward hardware, software, and expertise that is geared for users with the largest computational needs.” While these investments have increased by approximately 90 percent since 2010, CDI policy analyst and report author Hodan Omaar stated, “there is still three times more demand for access to the HPC resources than DOE has been able to support.”

CDI backed that statement by citing demand-supply data from DOE’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) and NSF’s Extreme Science and Engineering Discovery Environment (XSEDE) HPC resource allocation programs.

“Increasing access to HPC for researchers exploring new applications of AI will involve increasing access to different parts of HPC systems, including hardware, software, and expertise, for users with large computational needs and ‘the long tail’ of users with more modest HPC needs that represent, in aggregate, the majority of AI researchers,” wrote Omaar. “The Department of Energy (DOE) primarily invests in the former and has increased its investments in data-intensive, large-scale HPC resources over the last decade by almost 90 percent, from $277 million in 2010 to $538 million (in constant 2010 dollars) in 2019. In contrast, the National Science Foundation (NSF), which is the primary source of HPC investments for the latter, has decreased its HPC funding by approximately 50 percent, from $325 million in 2010 to $167 million in 2019. This discrepancy has led to a U.S. HPC portfolio weighted toward very powerful systems that can only support a smaller number of researchers. However, both funding sources fail to meet current demand.”
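For readers who want to check the arithmetic behind the quoted percentages, here is a minimal sketch that recomputes the changes from the dollar figures given above. The helper name `percent_change` is illustrative and not from the report, and the sketch assumes the quoted amounts are directly comparable.

```python
def percent_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100.0


# Funding figures in millions of dollars, as quoted from the report above.
doe_change = percent_change(277, 538)  # DOE, 2010 to 2019
nsf_change = percent_change(325, 167)  # NSF, 2010 to 2019

print(f"DOE: {doe_change:+.1f}%")
print(f"NSF: {nsf_change:+.1f}%")
```

On these figures the DOE increase works out to roughly 94 percent and the NSF decrease to roughly 49 percent, in line with the report's rounded characterizations. Tripling the quoted 2019 NSF figure of $167 million gives about $501 million, which matches the recommended floor of $500 million a year.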

Comparison of requested XSEDE service units (SUs) to available SUs (source: CDI)

Among its other recommendations “to rebalance the country’s HPC portfolio,” the CDI said:

- NSF and DOE should increase access to HPC resources for minority-serving institutions.

- NSF and DOE should increase access to HPC resources for women.

- NSF should provide funding to develop HPC curricula at two-year colleges that enable seamless transfer into four-year colleges.

- NSF should diversify the portfolio of HPC resources it makes available to AI researchers.

- NSF should establish and publish roadmaps that articulate what future investments it will make.

- NSF should foster more public-private partnerships.

- DOE and NSF should ensure grantees are using HPC resources wisely and efficiently.

“Without sufficient access to HPC, researchers in a diverse range of science and engineering fields can’t address important research challenges,” said Omaar. “HPC has fueled the development of cutting-edge AI applications such as natural language processing and machine learning. But limited availability of HPC resources hampers researchers’ ability to develop new products and services that will be vital for maintaining U.S. competitiveness and national security. It is critical for researchers to apply AI to everything from defense innovation to societal challenges such as health care and climate change.”