[SPONSORED GUEST ARTICLE] Meta (formerly Facebook) has a corporate culture of aggressive technology adoption, particularly in the area of AI and adoption of AI-related technologies, such as GPUs that drive AI workloads. Members of a virtual reality research project found themselves in need of greater GPU-driven compute power but ran into limitations and roadblocks common in the IT industry: limited GPU server density and limited GPU availability, hindering their ability to efficiently meet growing workload intensity.

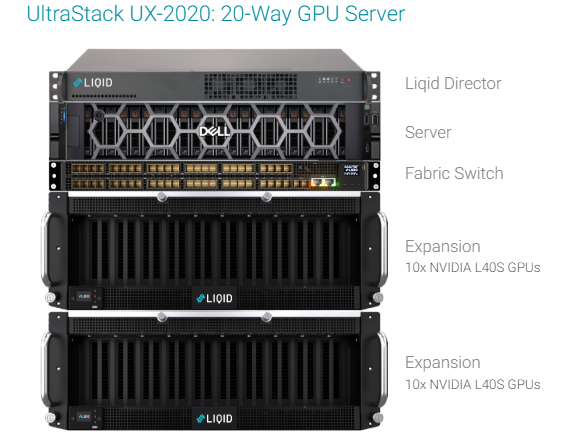

In response Meta turned to Liqid, a pioneer in composable disaggregated infrastructure. Last November at the SC23 supercomputing conference, Liqid announced the UltraStack server solution featuring 2U Dell PowerEdge servers with up to 20 NVIDIA L40S GPUs, designed to meet high GPU density demands. UltraStack can help accelerate next-generation workloads by delivering 35 percent higher performance, while ensuring cost savings with a 35 percent reduction in power consumption and a 75 percent reduction in software licensing costs when compared to lower GPU capacity servers.

In this video interview, we spoke with Meta Staff Research Engineer Vasu Agrawal, who shares insights about a VR project he’s involved with at Meta, the role of Liqid UltraStack with NVIDIA L40S GPUs and Dell PowerEdge servers and how it has delivered performance improvements over its previous compute capability, significantly advancing the project.

The core advantage of UltraStack lies in its ability to seamlessly and transparently connect large quantities of GPUs to a standard server. In so doing, UltraStack addresses three key challenges in AI computing:

Cost and Complexity – Many AI deployment plans are hindered by inadequate GPU density, typically ranging from four to eight GPUs per server. Distributing GPUs across several servers increases power, cooling, and space costs while adding system management complexity. UltraStack addresses those problems with a solution that incorporates up to 20 GPUs per server.

Time – The AI industry faces wait times for high-performance GPUs, a major concern in the way of timely and efficient AI infrastructure implementation. The ready availability of NVIDIA L40S GPUs addresses this challenge.

Time – The AI industry faces wait times for high-performance GPUs, a major concern in the way of timely and efficient AI infrastructure implementation. The ready availability of NVIDIA L40S GPUs addresses this challenge.

Performance –Dispersing GPUs across multiple servers can reduce computational efficiency and performance, key for intensive AI workloads. But with up to 20 GPUs per server, UltraStack delivers higher performance:

- MLPerf 3.1 Inference LLM (Large Language Model) Results with 16x L40S GPUs: UltraStack scored an impressive 94 queries/sec at ~7,000W.

- Additional MLPerf 3.1 Inference tests with 16x L40S GPUs showed:

- Object Detection: 10,104 queries/sec

- Medical Imaging: 62.6 queries/sec

- Natural Language Processing: 44,730 queries/sec

- The full performance data is available in the whitepaper, downloadable here.

UltraStack is available as stand-alone and cluster-ready servers options. While the stand-alone options contains up to 20 GPUs, the cluster-ready servers include up to 16 L40S GPUs, in addition to Liqid IO Accelerator NVMe SSDs, NVIDIA ConnectX-7 NICs and NVIDIA BlueField-3 DPUs, for internode connectivity and efficiency in complex AI environments.

Speak Your Mind